Top Data Engineering Tools to Learn in 2026

Data engineering is a booming discipline, both because of the professional opportunities it offers and how it allows businesses to leverage data for competitive advantage. The Market Data Forecast predicts that the global big data and data engineering services market size will be worth US$325.01 billion by 2033, up from US$88.85 billion in 2025, marking a CAGR of 17.6%.

Undoubtedly, there is no better time than now to build a career in data engineering. An excellent way to start is by specialising in the use of key data engineering tools. In this write-up, we have covered the most essential data engineering tools you must master.

You don't need to learn all these data engineering tools at once; ideally, you should familiarise yourself with as many as possible and gain practical experience with at least one or two, applying them to different projects.

Data engineering tools are important in handling and engineering data for the following reasons.

- Efficient Data Management

- Process automation

- Scalability

- Data integration

- Informed decision-making

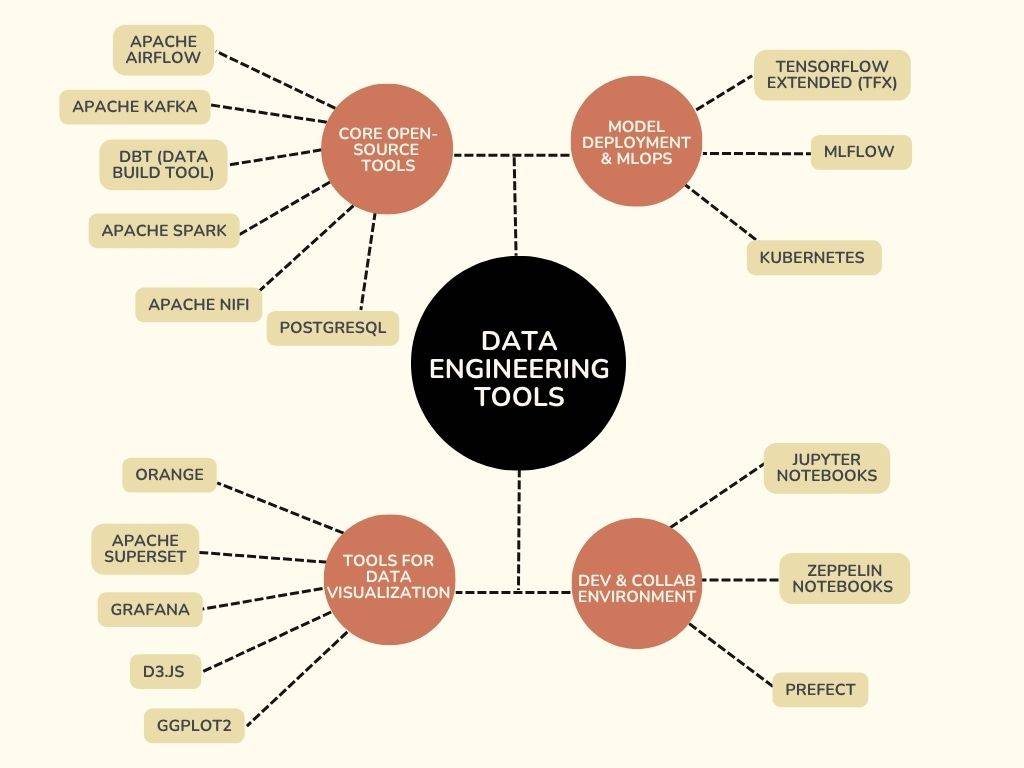

- Top Data Engineering Tools

- Core Open-Source Data Engineering Tools

- Tools Supporting Data Visualization

- Development and Collaboration Environments

Top Data Engineering Tools

Core Open-Source Data Engineering Tools

The work of the Data Engineer consists of building, maintaining, and optimising complex data flows, from information extraction to large-scale storage. An essential part of the technology stack for data teams around the world is now open-source tools. Following are some of the most used ones.

1. Apache Airflow – Pipeline Orchestration

Apache Airflow has become the de facto standard for automation and scheduling workflows involved in data engineering. It allows one to define pipelines as code-in Python and manage dependencies between complex tasks efficiently in order to be properly executed and monitored. Airflow supports many integrations with major cloud providers, such as AWS, GCP, and Azure, and is able to handle complex DAGs for batch workflows.

5. Apache NiFi – Data Flow Automation

NiFi has a graphical drag-and-drop interface that facilitates the design and automation of complex data flows. The users drag and drop components to route, transform, and move data from systems to other target systems. It is user-friendly, even for non-programmers. It is particularly useful for any IoT use case that includes data ingestion or involves ETL automation.

6. PostgreSQL – Robust and scalable relational database

PostgreSQL is a powerful, flexible, and extensible open-source relational database. Many engineers choose it for data staging, intermediate modelling, or as a transactional engine in analytical solutions.

Tools Supporting Model Deployment and MLOps

One of the primary objectives of data science is to create machine learning models from data. The models can be of logical, geometrical, or probabilistic type. These tools fill the gap between data engineering and data science, especially in managing machine learning pipelines and deployment. Below are some tools you can use to build and deploy models:

TensorFlow Extended (TFX)

TFX is suitable for data engineers working on machine learning pipelines, covering data validation, model training, and serving. It has a broad set of tools and libraries that address various stages of an ML pipeline.

MLFlow

MLFlow is a platform for managing the machine learning life cycle: building and packaging to deploying models. If you are experimenting with various tools or building several models, MLFlow will help you manage all of them from one location. It lets you integrate with the product to include libraries, languages, and algorithms.

Tools Supporting Data Visualization

Data visualization should do more than representing data graphically. It should be scientific, visual, and, most of all, with great information. Here are some tools to visualize your data engineering projects.

Orange

Orange is a tool for data visualization, easy to use and characterized by numerous features. Designed for beginners and controlled via a graphical interface, it shouldn't be underestimated: it allows the user to create statistical distributions, box plots, decision trees, hierarchical clustering, and linear projections.

Apache Superset

Apache Superset is an open-source data exploration and visualization platform. It enables data professionals to create data insights through complex dashboards and interactive reports. A large number of customizations are available, and it provides visual reports on trends, anomalies, and key insights.

Grafana

Grafana is an open source data visualization and monitoring solution that allows data engineers to collate all data sources into a single dashboard for better data monitoring and troubleshooting.

D3.js (Data-Driven Documents)

D3.js enables the visualization of data in web browsers with the help of HTML, SVG, and CSS. It finds wide application in data science because it offers animation and interaction in visualizations.

ggplot2

ggplot2 helps you in making pleasing and elegant visualizations using R. If you want to amaze your audience with well-structured visual graphics, then ggplot2 is your go-to option.

Development and Collaboration Environments

Like any other discipline in programming, writing and deploying data science code can be done more effectively and efficiently with the help of an Integrated Development Environment. IDEs offer code suggestions, allow you to run tests, easily detect errors, and even run code with plugins. Here are some IDEs related to data engineering:

Jupyter Notebooks

Jupyter Notebooks is a web application that hosts code, data, annotations, equations, and more in an interactive document. This is a great tool for collaborating on a project with other data scientists.

Prefect

Prefect is a Python-native workflow orchestration tool for building, scheduling, and monitoring data pipelines and other complex workflows. Emphasizing observability and flexible deployment, it has a smart, developer-friendly experience.

Kubernetes

Kubernetes is an application management tool that simplifies the orchestration of the whole process. It brings data engineers the possibility to run data processing work on the most reliable and scalable basis. It automatically controls the deployment, scaling, and operational management of complex data pipelines, enabling them to remain consistently operational across different environments.

Zeppelin Notebooks

Zeppelin Notebooks Apache Zeppelin Notebooks are a web-based environment for data analysis supporting multiple languages like Python, SQL, and Scala. With the aid of Zeppelin, one can explore, share, analyze, and visualize data in one place.

Conclusion

Data engineering tools are no longer an option for businesses; they form the backbone of every successful data-driven firm. These tools, through their scalable, automated, and collaborative solutions, turn the raw data into a reliable asset, thereby making the businesses flexible, cutting down on costs, and winning in an already complex data world by employing better data technology.

Call 8585951111

Call 8585951111

Rashmi Karan is a writer and editor with more than 15 years of exp., focusing on educational content. Her expertise is IT & Software domain. She also creates articles on trending tech like data science,